The Lean Startup by Eric Ries is a very interesting book about creating a successful business.

It’s really a great read even if you are not working in a startup. In fact, most large companies have moved away from older development processes that didn’t adapt well to new technologies. In today’s world, we ship software daily, sometimes multiple times per day. We even ship multiple versions of the software in parallel (A/B testing) to find out which version resonates the most with our users. This is, of course, very different from shipping software on a CD every n years.

Notes

Below are some quotes from the book with some comments.

The fundamental goal of entrepreneurship is to engage in organization building under conditions of extreme uncertainty, its most vital function is learning. […] Learn what customers really want, discover whether we are on a path that will lead to growing a sustainable business.

Behind the word “learning” hides a lot of complexity: it’s about demonstrating empirically that we are going in the right direction. This also means that we should avoid vanity metrics (e.g. instead of tracking the number of visitors to your product page, track the number of visitors actually taking crucial actions within your product).

Our main concerns in the early days dealt with the following questions: What should we build and for whom? What market could we enter and dominate? How could we build durable value that would not be subject to erosion by competition?

Too often we don’t ask ourselves these questions: we are so focused on building a great product (from the engineering perspective) that we lose sight of what matters. The what, for whom, and how much are questions you should always have in mind.

The effort that is not absolutely necessary for learning what customers want can be eliminated. I call this validated learning because it is always demonstrated by positive improvements in the startup’s core metrics.

The idea is to build the minimum viable product that will confirm whether your product is going in the right direction, as measured by your core metrics. In a large company, there is a tax that comes with being part of it. Some of the minimum things that need to be built are not always relevant to our learning but are present to reinforce the company’s core values (e.g. security and privacy can to some extent be punted to later by a startup, while a group in a large company cannot do that).

The value hypothesis tests whether a product or service really delivers value to customers once they are using it. […] Growth hypothesis tests how new customers will discover a product or service.

These should be part of your core metrics: are we delivering value? How does our product’s growth happen? They are the most important leap-of-faith questions any startup faces.

Answer four questions before investing engineering resources:

- Do consumers recognize that they have the problem you are trying to solve?

- If there was a solution, would they buy it?

- Would they buy it from us?

- Can we build a solution for that problem?

For larger companies, there is an additional layer of complexity: there is often a sales organization interacting directly with the customers. Typically, product teams were not directly engaged with the customers. In my current group at Microsoft (Azure), the program managers interview customers directly, engaging with them to understand their challenges and to establish a great relationship with them.

Build a process of identifying risk and assumptions before building anything and then testing those assumptions experimentally

Use prototypes and interviews: it’s cheaper than building a product!

Build experiments, identify the elements of the plan that are assumptions rather than facts, and figure out ways to test them. Using these insights, we could build a minimum viable product.

These reiterate the previous point: the goal is to reduce the cost of building a product that will be liked and used by customers.

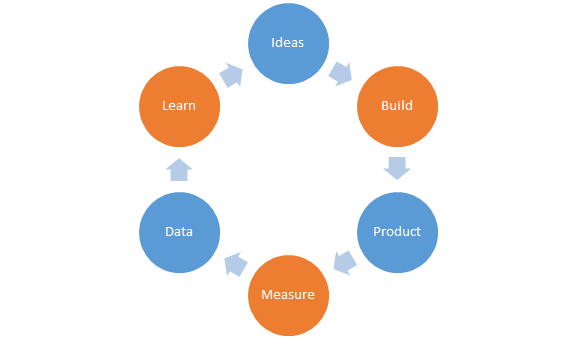

The MVP is that version of the product that enables a full turn of the Build-Measure-Learn loop with a minimum amount of effort and the least amount of development time. […] MVP is designed not just to answer product design or technical questions. Its goal is to test fundamental business hypotheses. […] A video can be a great form of MVP, a demonstration of how the technology works, targeted at a community of technology early adopters.

To perform well within this cycle, your infrastructure needs to support fast iteration. You can’t really build, measure, and learn if it takes you one month to ship bits to production. Moving away from the traditional infrastructure is probably one of the biggest investments a company needs to make in order to move to a leaner development cycle. Some fundamentals required:

- Support for fast deployment to production

- Support for testing in production and experimentation (A/B testing)

- Channel to gather feedback from users and measure their interest.
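To make the experimentation requirement a bit more concrete, here is a minimal sketch of how users could be split between two versions of a product. The function name `assign_variant`, the experiment name, and the even split between variants are assumptions made up for this illustration; they do not come from the book.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment.

    Hashing the experiment name together with the user id keeps the
    assignment stable across sessions without storing any state.
    (Hypothetical helper, for illustration only.)
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # The same user always lands in the same variant for a given experiment.
    print(assign_variant("user-42", "new-onboarding"))
```

Because the assignment depends only on the user and the experiment name, each user keeps seeing the same version, which makes it possible to compare the core metrics of the two groups afterwards.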

Traditional approaches such as interaction design or design thinking are enormously helpful.

Give the concierge treatment to your early adopters, learn more and more about what it takes to make the product great. […] Measured according to traditional criteria, this is a terrible system, entirely nonscalable and a complete waste of time. But as a result of the learnings, the development efforts involve less waste than typical.

That’s the whole premise: invest time in learning from your users and make sure you build what they need. Then invest in building what delivers the greatest value for them first. Do things that don’t scale.

Most modern business and engineering philosophies focus on producing high-quality experiences for customers as a primary principle. […] These discussions of quality presuppose that the company already knows what attributes of the product the customers will perceive as worthwhile.

If your MVP feels rough to your customers, use it as an opportunity to learn about what they care about. Always ask yourself whether your customers care about design in the same way you do.

The truth is that most managers in most companies are already overwhelmed with good ideas. Their challenge lies in prioritization and execution, and it is those challenges that give a startup a hope of surviving.

A good related topic to read about is disruptive innovation. If you are part of a larger company, the prioritization effort should come from what you learned from your users. When working with a lot of talented engineers, it is sometimes more exciting to solve big technical challenges than to build the set of features your users need.

A startup’s job is to 1) rigorously measure where it is right now, confronting the hard truths that assessment reveals, and then 2) devise experiments to learn how to move the real numbers closer to the ideal reflected in the business plan.

This is true not only for startups but for all companies, no matter where they are in their lifecycle.

The rate of growth depends primarily on three things: the profitability of each customer, the cost of acquiring new customers, and the repeat purchase rate of existing customers.

It’s interesting to focus on the rate of growth without being obsessed by other cost factors (such as cost to serve), as those costs will likely benefit from economies of scale.

The three learning milestones are: 1) use a minimum viable product to establish real data on where the company is right now, 2) tune the engine (of growth) from the baseline toward the ideal, 3) pivot or persevere.

Step #3 is rarer for larger companies; I can’t remember many examples where a large company pivoted. It seems the outcome of #3 is more often fail or persevere.

Funnel metrics: behaviors that are critical to your engine of growth (e.g. customer registration, download of application, trial, repeated usage, purchase).

User funnel analysis is very helpful to discover how you are doing and to identify friction points that deserve your attention. When adding new features to your product, always make sure that you can easily measure all the steps leading to the feature and how the feature is used.
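As an illustration of funnel analysis, here is a minimal sketch that counts how many distinct users reached each step and how well each step converts from the previous one. The step names mirror the examples quoted above; the function name `funnel_report` and the `(user_id, step)` event format are assumptions for this example.

```python
# Ordered funnel steps, loosely based on the examples quoted above.
FUNNEL_STEPS = ["registration", "download", "trial", "repeated_usage", "purchase"]


def funnel_report(events):
    """Return (step, distinct_users, conversion_from_previous_step) tuples.

    `events` is an iterable of (user_id, step) pairs (hypothetical format).
    The conversion is None for the first step and whenever the previous
    step had no users. This simple version does not check that a user
    actually went through the earlier steps.
    """
    users_per_step = {step: set() for step in FUNNEL_STEPS}
    for user_id, step in events:
        if step in users_per_step:
            users_per_step[step].add(user_id)

    report = []
    previous_count = None
    for step in FUNNEL_STEPS:
        count = len(users_per_step[step])
        if previous_count:
            conversion = count / previous_count
        else:
            conversion = None
        report.append((step, count, conversion))
        previous_count = count
    return report
```

A sharp drop in conversion between two consecutive steps points to a friction point worth investigating.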

Cohort analysis: instead of looking at cumulative totals or gross numbers such as total revenue or total number of customers, one looks at the performance of each group of customers that comes into contact with the product independently (e.g. customers who joined each month).

Cohort analyses are also useful for tracking how metrics evolve after a change: once you ship new features, you can measure their effects on the cohorts that received the change.
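A cohort analysis can be sketched in a few lines. The example below groups customers by the month they joined and computes the share of each cohort that made a purchase. The function name `purchase_rate_by_cohort` and the `(signup_date, has_purchased)` input format are assumptions chosen for this illustration.

```python
from collections import defaultdict
from datetime import date


def purchase_rate_by_cohort(customers):
    """Group customers by signup month and compute each cohort's purchase rate.

    `customers` is an iterable of (signup_date, has_purchased) pairs
    (hypothetical format). Returns a dict mapping "YYYY-MM" to the
    fraction of that month's signups who purchased.
    """
    totals = defaultdict(int)
    purchasers = defaultdict(int)
    for signup_date, has_purchased in customers:
        cohort = signup_date.strftime("%Y-%m")
        totals[cohort] += 1
        if has_purchased:
            purchasers[cohort] += 1
    return {cohort: purchasers[cohort] / totals[cohort] for cohort in sorted(totals)}


if __name__ == "__main__":
    # Made-up sample data, only to show the shape of the input.
    sample = [
        (date(2024, 1, 5), True),
        (date(2024, 1, 20), False),
        (date(2024, 2, 1), True),
    ]
    print(purchase_rate_by_cohort(sample))
```

Comparing the rate of the cohort that joined after a change with the cohorts before it gives a cleaner signal than a cumulative total, which tends to go up no matter what.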

Metrics should honor the three A’s: actionable (clear cause and effect), accessible (everyone can understand and access them), auditable (ensure the data is credible).

As soon as we formulate a hypothesis that we want to test, the product development team should be engineered to design and run this experiment as quickly as possible, using the smallest batch size that will get the job done. Remember that although we write the feedback loop as build-measure-learn because the activities happen in that order, our planning really works in the reverse order: we figure out what we need to learn and then work backwards to see what product will work as an experiment to get that learning.

In theory that resonates really well with me; in practice, however, it sounds harder to pull off. The development team is often assigned to work on multiple things and has different priorities. This assumes that pulling a team of developers out of their ‘normal’ duties is fine. I’ve never seen this work successfully in larger and older groups.

Technically, more than one engine of growth can operate in a business at a time […] successful startups usually focus on just one engine of growth, specializing in everything that is required to make it work.

Engines of growth: 1) sticky engine (relies on repeated usage), 2) viral engine (network effect), 3) paid engine (to acquire customers).

Andon cord: “Stop production so that production never has to stop”. […] You cannot trade quality for time. Defects cause a lot of rework, low morale, and customer complaints, all of which slow progress and eat away at valuable resources.

Balancing speed and quality is complex; a system that enforces quality might have a higher cost at first, but in the long run it will provide multiple benefits.

Use the Five Whys: this helps get to the root of every seemingly technical problem.

But don’t use it to blame people; use it to learn and to prevent similar problems from ever happening again. See the 5 Whys article on Wikipedia.

Conclusion

This book will stay on my shelf. What I like the most about it is:

- Put learning at the forefront of any development effort, and make sure you can measure your successes and failures. Build an MVP.

- Stay in sync with your customers, learn what they need and how they work.

- The end goal is to be successful; this will most probably involve failures (learning opportunities), which will call for perseverance or for pivots.

- Avoid vanity metrics, focus on real metrics.